Lockfree ring buffer in Rust

Introduction

After tinkering with a custom Raft implementation, I used channels to share data between modules. I leaned on the Tokio library’s channels, which are blazing fast. But channels are a solved problem, and I hadn’t yet put my own spin on them. So, I decided to roll my own from scratch.

First iteration

A natural starting point for implementing a channel is to wrap an array (static or dynamic) in a lock, such as a Mutex or RwLock, or use a semaphore to control access. This approach works well in some scenarios, is efficient enough for many use cases, and is straightforward to implement, as shown below:

;

But this isn’t that exciting… This implementation is simple, efficient, and easy to understand. However, it didn’t feel like my creation. I wanted to build something ‘from scratch’, without relying on locks.

Lockfree ring-buffer

When you think in performance and arrays, one structure that come to mind is usually a ring buffer. The idea is simple: you cycle through a fixed-size array, overwriting elements that have been read. You track the read and write positions with a head and tail.

The Slot is a structure that lets us track whether a slot has been read or written:

A lock-free data structure enables multiple threads to access it concurrently without using locks. Instead, it relies on atomic operations, low-level CPU instructions that execute indivisibly, ensuring thread safety without blocking. To acheive lockfree concurreny you dont need a lot of code, it is easier that it seems. Peek at how Mutex or channels are implemented in Rust’s standard library, and you’ll see they use the same atomic tricks.

This is what the push method looks like in my lockfree ring-buffer implementation. In the push method, we first load the current tail index atomically. We check if tail is still behind head (indicating available slots in the buffer). If so, we attempt to increment tail using compare_exchange_weak to reserve a slot. If the operation fails (due to concurrent modifications), we retry using a spin loop, which avoids blocking and allows other threads to proceed. Once tail is incremented, we compute the array index and access the corresponding slot. We verify the slot’s sequence number matches the expected index, ensuring it’s ready for writing. If not, we spin and retry. Once ready, we write the value to the slot and increment its sequence number to signal completion. The sequence number tracks the slot’s state: adding N signals it’s ready for writing, while adding 1 signals it’s ready for reading. This ensures correct synchronization between producers and the consumer.

To build a lock-free data structure, you need to understand atomic operations and memory ordering. The ordering semantics, Acquire and Release, are similar to those used in locks. The Acquire ordering ensures that loads see the latest state modified by other threads. The Release ordering ensures that stores make prior writes visible to other threads. The compare_exchange_weak with AcqRel combines both semantics to safely update tail while maintaining consistency.

You can easly extrapolate and figure out what the read method looks like.

Making the structure faster

This block of code boosted my implementation’s performance by 20%. This struct aligns data to the CPU’s cache line size, reducing false sharing between threads. False sharing occurs when multiple threads access different variables on the same cache line, causing unnecessary cache invalidation. By padding the indices, we ensure each resides on its own cache line.

/// Pads and aligns a value to the length of a cache line.

// Starting from Intel's Sandy Bridge, spatial prefetcher is now pulling pairs of 64-byte cache

// lines at a time, so we have to align to 128 bytes rather than 64.

//

// Sources:

// - https://www.intel.com/content/dam/www/public/us/en/documents/manuals/64-ia-32-architectures-optimization-manual.pdf

// - https://github.com/facebook/folly/blob/1b5288e6eea6df074758f877c849b6e73bbb9fbb/folly/lang/Align.h#L107

//

// ARM's big.LITTLE architecture has asymmetric cores and "big" cores have 128-byte cache line size.

//

// Sources:

// - https://www.mono-project.com/news/2016/09/12/arm64-icache/

//

// powerpc64 has 128-byte cache line size.

//

// Sources:

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_ppc64x.go#L9

// arm, mips, mips64, and riscv64 have 32-byte cache line size.

//

// Sources:

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_arm.go#L7

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_mips.go#L7

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_mipsle.go#L7

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_mips64x.go#L9

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_riscv64.go#L7

// s390x has 256-byte cache line size.

//

// Sources:

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_s390x.go#L7

// x86 and wasm have 64-byte cache line size.

//

// Sources:

// - https://github.com/golang/go/blob/dda2991c2ea0c5914714469c4defc2562a907230/src/internal/cpu/cpu_x86.go#L9

// - https://github.com/golang/go/blob/3dd58676054223962cd915bb0934d1f9f489d4d2/src/internal/cpu/cpu_wasm.go#L7

//

// All others are assumed to have 64-byte cache line size.

You’ll find this trick in Rust’s standard library channels and the crossbeam crate. I shamelessly borrowed it from Rust’s source code. Essentially, it makes your indices larger to optimize cache access.

Where is this used? Right here:

On the indeces.

Measurements

Alright, let’s get serious for a second, we’re not just throwing code at the wall to see what sticks. To make sure my lock-free ring buffer wasn’t a complete disaster, I benchmarked it. And honestly? It’s pretty darn good. But it’s up against a heavyweight: Rust’s standard library MPSC channel. For comparison, I also tested two naive implementations: a VecDeque wrapped in a Mutex and one in an RwLock.

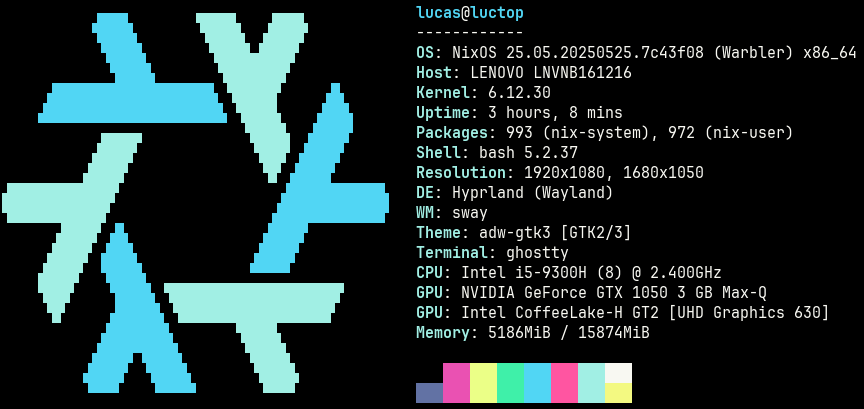

The benchmarks were run using the criterion library, which gave me nice, reliable measurements. Tests were conducted on my machine

with the following setup:

const CAPACITY: usize = 1024;

const OPERATIONS: usize = 100_000;

The tests cover two scenarios: single-producer single-consumer (SPSC) and multiple-producer single-consumer (MPSC). They’re straightforward: one or more threads write values to the buffer, and another reads them as fast as possible.

SPSC

In the single-producer single-consumer scenario, one thread writes to the buffer while another reads from it.

The average times are:

- My ring buffer: 6 ms

- Mutex: 10 ms

- RwLock: 12 ms

- Standard MPSC channel: 3 ms

Here’s an example of the test: Example:

let buffer = new;

let producer_buffer = buffer.clone;

let consumer_buffer = buffer.clone;

let producer = spawn;

let consumer = spawn;

producer.join.unwrap;

consumer.join.unwrap;

MPSC

In the multiple-producer single-consumer scenario, two producers write to the buffer while one consumer reads.

The average times are:

- My ring buffer: 14 ms

- Mutex: 26 ms

- RwLock: 28 ms

- Standard MPSC channel: 7 ms

Here’s the test code:

let = ;

let producer_buffer2 = producer_buffer.clone;

let producer = spawn;

let producer2 = spawn;

let consumer = spawn;

producer.join.unwrap;

producer2.join.unwrap;

consumer.join.unwrap;

Observations

I tried some micro-optimizations, like using bitwise operations instead of modulo:

And implementing an exponential backoff retry instead of a plain spin loop:

let mut backoff = 1;

let push_idx = loop ;

But these tweaks didn’t move the needle, my implementation is still about half as fast as the standard library’s MPSC channel.

Conclusion

Here’s the deal: no matter how much you polish micro-optimizations like bitwise indexing or exponential backoff, the real magic happens in the architecture. Rust’s standard MPSC channels and crossbeam lean on linked lists, which seem to dodge the contention bottlenecks my ring buffer hits. Building a lock-free ring buffer was a deep dive into atomics, memory ordering semantics, and cache-line padding. Totally worth it. If you’re curious, grab my code, benchmark it on your rig, and see where you can push it further!